7 Best Practices for Session Evaluations at Your Next Conference

Well-organized conferences typically provide an evaluation form that attendees fill out at the conclusion of a learning session to rate presenters and give other feedback. Response rates tend to be lackluster, and when attendees do respond, the feedback isn’t always helpful.

At Conferences i/o, we’ve been studying how our customers use session evaluations. Drawing on experience with hundreds of thousands of attendees across hundreds of events, we’ve prepared a set of best practices you can follow at your next event to get the most out of session evaluations.

Make the evaluation easy to complete

It’s an old saying, but if you want someone to do something, make that action drop-dead simple. Simplicity is the advantage of paper forms: although they are environmentally wasteful and time-consuming to tally afterward, it doesn’t get any simpler than putting a paper form in front of attendees.

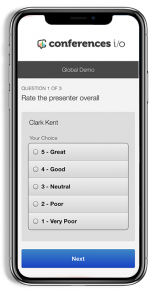

Quick plug: with Conferences i/o, attendees can conveniently complete session evaluations right after they use our audience engagement platform to ask questions and respond to polls. That’s one reason why our digital session evaluations have higher response rates than traditional paper forms.

Keep the evaluation brief

Usability research consistently shows that the fewer fields a form has, the more likely someone is to complete it. While there are tricks to making a longer survey feel short, nothing helps quite like actually keeping it short. So think about what you want to get out of the survey and stick to what’s absolutely necessary.

Put thought into your session evaluation questions

I’ve attended dozens of events over the last decade, and at more than a few, it seemed like the event’s organizers downloaded a generic session evaluation template and swapped out the logo. When I see this, I put about as much effort into responding as the organizers did putting it together.

When you take the time to customize questions for your event’s audience, you will generally get better response rates and better data. Think through what you want to get out of the evaluation. If it’s a tool to help presenters, focus the questions on how the presenters can improve. If you want to gauge usefulness, keep the questions centered around what the audience got out of the session.

Set aside time to complete evaluations

A very common reason for low session evaluation response rates is that little or no time is left at the end of the session. Presenters and room moderators are often left with a sheepish look on their faces, half-heartedly reminding attendees to complete the session evaluation form. The trouble, obviously, is that the session has ended, and the audience wants to get out to their break, or head over to lunch, or hit the road after a long day.

But also be careful about forcing evaluations on attendees. If your crowd obviously wants to get out of there, you can always remind them at the start of the next session to complete evaluations for the last session.

Incentivize completion of session evaluations

Another creative way to get around low response rates is to create incentives for attendees. If your event has sponsor giveaways, the number of raffle tickets an attendee receives could depend in part on how many evaluations they complete. If a giveaway prize isn’t available, a simple honor, like calling out the attendees who completed the most evaluations, could be enough.

Alternatively, you can appeal to an attendee’s sense of duty. When asking attendees to complete a session evaluation, remind them that the evaluations are a great benefit for presenters, and for the organizers to make the event better next time around.
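For organizers who track evaluations digitally, the raffle idea above can be sketched in a few lines of Python. This is only an illustration under assumptions: the attendee names, the `raffle_tickets` and `draw_winner` helpers, and the one-ticket-per-evaluation rule are all hypothetical, not a feature of any particular platform.

```python
import random
from collections import Counter


def raffle_tickets(completed_evaluations):
    """Return one raffle ticket per completed evaluation (assumed rule)."""
    counts = Counter(completed_evaluations)
    tickets = []
    # Sort so the ticket order is stable regardless of submission order.
    for attendee, n in sorted(counts.items()):
        tickets.extend([attendee] * n)
    return tickets


def draw_winner(tickets, seed=None):
    """Pick one ticket at random; pass a seed to make the draw repeatable."""
    rng = random.Random(seed)
    return rng.choice(tickets)


# Hypothetical log: one entry per evaluation an attendee submitted.
completed = ["ana", "ben", "ana", "cara", "ana"]
tickets = raffle_tickets(completed)
winner = draw_winner(tickets, seed=7)
print(f"{len(tickets)} tickets in the draw; winner: {winner}")
```

Because each evaluation adds a ticket, attendees who complete more evaluations have proportionally better odds, which is the incentive.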

Make evaluations anonymous by default

A common source of bias in session evaluations is that participants respond overly positively because they think that’s what organizers and presenters want to see. It’s called good-participant bias. At a restaurant, it’s a bit like telling the waiter that the food is great, even though you think it’s mediocre.

Good-participant bias can be avoided, in part, by assuring attendees that their feedback is being recorded anonymously. This is particularly important when the stakes are high, like at a corporation’s internal employee conference. (Would you be willing to assign negative ratings to an executive at your company if your name were stamped on the session evaluation?)

We recommend making anonymity the default and, if necessary, allowing attendees to opt in to identifying themselves.

Note: If you are incentivizing evaluations, you have to record who has been submitting them. Make sure you explain that identifying data will be discarded once the incentive has ended.
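One way to reconcile incentives with anonymity is to keep the answers and the list of who submitted in two separate places. The sketch below is a minimal illustration, assuming a hypothetical `EvaluationStore` class and made-up field names; a real system would also need to avoid timestamps or ordering that could link the two records.

```python
from dataclasses import dataclass, field


@dataclass
class EvaluationStore:
    # Anonymous answers only: no attendee identifier is stored here.
    responses: list = field(default_factory=list)
    # attendee_id -> number of evaluations submitted, kept only for incentives.
    participants: dict = field(default_factory=dict)

    def submit(self, attendee_id, session, rating, comment=""):
        # Store the feedback without any reference to the attendee.
        self.responses.append(
            {"session": session, "rating": rating, "comment": comment}
        )
        # Record only the fact that this attendee submitted an evaluation.
        self.participants[attendee_id] = self.participants.get(attendee_id, 0) + 1

    def close_incentive(self):
        # Discard identifying data once prizes have been awarded.
        self.participants.clear()


store = EvaluationStore()
store.submit("ana", "Opening keynote", rating=4, comment="Great pacing")
store.submit("ana", "Workshop A", rating=2)
store.submit("ben", "Workshop A", rating=5)
counts_before_close = dict(store.participants)
store.close_incentive()
```

After `close_incentive()` runs, the feedback remains available for analysis, but nothing in the store says who took part.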

Finally, use the feedback

If attendees are spending a few minutes per session completing your evaluations, they’d absolutely appreciate seeing their suggestions recognized and acted upon.

After the event concludes, and after you have had a chance to compile responses, you can send an update to attendees explaining what you heard and how you plan to address it for the next event. This shows attendees that you value their feedback and that you’re committed to improving. This is also a step toward creating a community around your event (communities are powerful). Just make sure you follow through on improvements.

Putting it all together

- Make it drop-dead simple for attendees to submit feedback.

- Only ask what needs to be asked (keep it short).

- Ask questions that meet your goals.

- Set aside session time (not attendee time) for evaluations.

- Consider incentivizing the completion of session evaluations.

- Make anonymity the default.

- Complete the circle by acting upon feedback.